[Conference Paper] Person Orientation and Feature Distances Boost Re-Identification

Title: Person Orientation and Feature Distances Boost Re-Identification

Authors: Jorge Garcìa, Niki Martinel, Gian Luca Foresti, Alfredo Gardel, Christian Micheloni

Conference: International Conference on Pattern Recognition (ICPR)

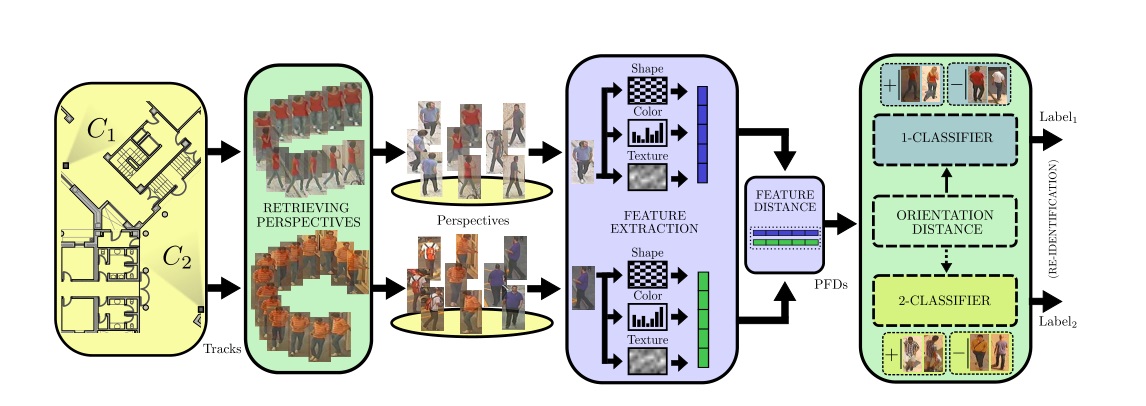

Overview of our approach. Given two tracklets of two persons acquired by disjoint cameras, we first recover the perspectives of the persons so that we have multiple frames of the same person viewed from different poses/viewpoints. Then, for each image, shape, color and texture features are extracted and the pairwise feature dissimilarity (PFD) between image pairs is computed. Finally, re-identification is performed by sending the PFD to one of the two previously learnt binary classifiers. The classifier is chosen on the basis of the difference in orientation between the person images used to compute the PFD.

Most of the open challenges in person re-identification arise from the large variations of human appearance and from the different camera views that may be involved, making pure feature matching an unreliable solution.

To tackle these challenges, state-of-the-art methods assume that features undergo a unique inter-camera transformation between two cameras.

However, the combination of viewpoints, scene illumination and photometric settings, together with the appearance, pose and orientation of a person, makes the inter-camera transformation of features multi-modal.

To address these challenges, we make three main contributions.

We propose a method to extract multiple frames of the same person with different orientations.

We learn the pairwise feature dissimilarities space (PFDS), formed by two subspaces: the pairwise feature dissimilarities computed between images of persons with similar orientations, and those computed between images of persons with dissimilar orientations.

Finally, a classifier is trained to capture the multi-modal inter-camera transformation of pairwise images for each subspace.
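The classifier selection described above can be illustrated with a minimal sketch. It is not the authors' implementation: the element-wise absolute difference used as the dissimilarity, the 45-degree orientation threshold, all function names, and the toy threshold classifiers in the usage note are assumptions made only for illustration. The abstract does not specify the features, the dissimilarity measure, or the classifier type.

```python
from typing import Callable, Dict, Sequence, List

# A binary classifier maps a PFD vector to True (same person) or False.
Classifier = Callable[[Sequence[float]], bool]


def pairwise_feature_dissimilarity(feat_a: Sequence[float],
                                   feat_b: Sequence[float]) -> List[float]:
    """Element-wise absolute difference (an assumed dissimilarity measure)."""
    if len(feat_a) != len(feat_b):
        raise ValueError("feature vectors must have the same length")
    return [abs(a - b) for a, b in zip(feat_a, feat_b)]


def orientation_difference(deg_a: float, deg_b: float) -> float:
    """Smallest angle between two orientations, in degrees, within [0, 180]."""
    diff = abs(deg_a - deg_b) % 360.0
    return min(diff, 360.0 - diff)


def select_subspace(deg_a: float, deg_b: float,
                    threshold_deg: float = 45.0) -> str:
    """Pick the subspace by orientation difference (threshold is hypothetical)."""
    if orientation_difference(deg_a, deg_b) <= threshold_deg:
        return "similar"
    return "dissimilar"


def same_person(feat_a: Sequence[float], deg_a: float,
                feat_b: Sequence[float], deg_b: float,
                classifiers: Dict[str, Classifier],
                threshold_deg: float = 45.0) -> bool:
    """Compute the PFD and send it to the classifier of the matching subspace."""
    pfd = pairwise_feature_dissimilarity(feat_a, feat_b)
    key = select_subspace(deg_a, deg_b, threshold_deg)
    return classifiers[key](pfd)
```

In use, `classifiers` would hold one trained binary classifier per subspace. As a stand-in, two placeholder rules such as `{"similar": lambda pfd: sum(pfd) < 1.0, "dissimilar": lambda pfd: sum(pfd) < 3.0}` show the idea that the same PFD can be judged differently depending on how much the two orientations differ.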

To validate the proposed approach, we compare it with state-of-the-art methods on two publicly available benchmark datasets, where it shows superior performance.