Research Areas

- Surveillance Systems
- Event analysis and Object Detection, Recognition and Tracking
- Active Vision / Camera network reconfiguration
- Data Fusion
- Audio signal processing and recognition
- Augmented reality
- Social Web

Surveillance Systems

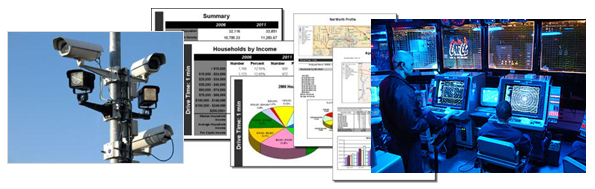

The AVIRES Lab has specific experience in developing advanced surveillance systems. In particular, the Lab has expertise in sensor calibration techniques, scene modeling and understanding, application-dependent databases, and real-time audio-video streaming. Specific methods for evaluating the performance of surveillance systems have also been studied at the AVIRES Lab.

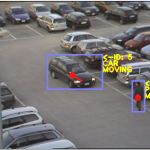

The AVIRES Lab took part in developing the prototype surveillance systems installed at the sanitary landfill test site of the Athena European project (remote surveillance of tele-operated vehicles in a hazardous environment). The AVIRES Lab has also developed image processing software for several prototype surveillance projects with SOCIETA’ AUTOVIE VENETE SpA (Italy), for the surveillance of tollgates and motorway overpasses. The Lab is active in the field of intelligent surveillance systems for transport environments (unattended highway areas, tunnels and bridges) and for public security environments (metro lines, railway stations, airports, etc.).

Event analysis and Object Detection, Recognition and Tracking

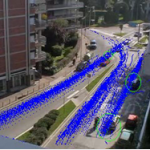

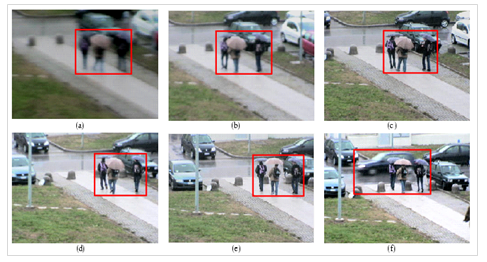

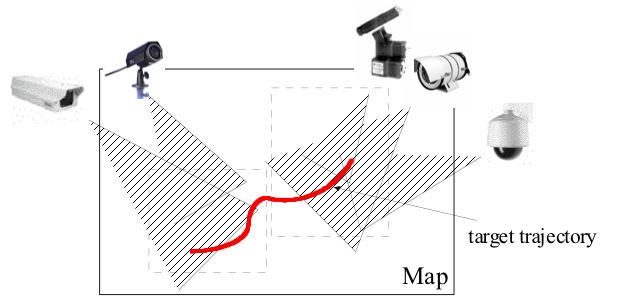

Change-detection and attention-focusing methods for both fixed and PTZ cameras have been studied extensively in order to reduce the amount of data to be processed. These techniques have also been extended to moving cameras, such as cameras mounted on mobile robots or UAVs. Image processing techniques based on statistical and mathematical morphology, Hidden Markov Models (HMMs) and Markov Random Fields (MRFs), rank-order filters, and higher-order spectral analysis have been widely studied and developed. Edge and region extractors are studied as segmentation techniques; in particular, the Lab has specific expertise in extracting complex geometric primitives (2D and 3D), perceptual grouping, and highly structured patterns.
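To make the change-detection idea concrete for a fixed camera, here is a minimal sketch using a textbook technique assumed for illustration, not necessarily the Lab's method: a running-average background model, absolute-difference thresholding, and a 3x3 binary erosion (a basic mathematical-morphology operator) that removes isolated noise pixels. Frames are grayscale images stored as lists of lists; the function names, `alpha`, and `threshold` are hypothetical choices.

```python
# Sketch of background subtraction for a fixed camera (assumed technique).
# Frames are grayscale images given as lists of lists of numbers (0-255).

def update_background(background, frame, alpha=0.05):
    """Blend the new frame into the background model (exponential running average)."""
    return [
        [(1 - alpha) * b + alpha * f for b, f in zip(brow, frow)]
        for brow, frow in zip(background, frame)
    ]


def change_mask(background, frame, threshold=25):
    """Mark pixels whose absolute difference from the background exceeds the threshold."""
    return [
        [1 if abs(f - b) > threshold else 0 for b, f in zip(brow, frow)]
        for brow, frow in zip(background, frame)
    ]


def erode(mask):
    """3x3 binary erosion: keep a pixel only if its whole neighbourhood is set.

    Border pixels are set to 0 because their neighbourhood is incomplete."""
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            out[y][x] = int(all(mask[y + dy][x + dx]
                                for dy in (-1, 0, 1) for dx in (-1, 0, 1)))
    return out
```

Only the pixels surviving erosion need further analysis, which is the data reduction the paragraph above refers to; the background is updated slowly so that gradual illumination changes are absorbed while moving objects are not.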

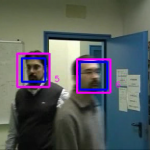

Recognizing complex objects in real environments (i.e., complex scenes) while meeting real-time requirements is a central task of current computer vision research. Our studies in this area are based on advanced neural networks (neural trees, neural forests, etc.) and clustering techniques for complex, multi-class data sets. The AVIRES Lab has developed specific object tracking techniques based on distributed networks of Extended Kalman Filters (EKFs) and particle filters (PFs), which exploit temporal information efficiently. EKFs and PFs have been applied to intruder tracking and motion estimation, showing the effectiveness of the proposed methods, also in terms of real-time performance. In this context, the Lab has expertise in depth from motion, depth from focus, and ground-plane-based methods.

Complex event analysis has been studied in depth, both for explicit event recognition and for anomalous behavior detection. In the first case, graphical models represent the relations between the simple events that compose a complex scenario to be recognized. In the second case, anomalies are detected with Support Vector Machines and, more generally, with automatically tuned kernel-based clustering techniques that separate normal data from outliers.
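EKFs and particle filters, used for the tracking work described above, share the predict/update cycle of the basic Kalman filter. The sketch below shows that cycle under simplifying assumptions: a linear constant-velocity model for a single image coordinate (so no linearization is needed, unlike an EKF), process noise `q` times the identity, and measurement noise `r`. The class name and noise values are illustrative, not taken from the Lab's systems.

```python
class ConstantVelocityKF:
    """Linear Kalman filter for one coordinate with state (position, velocity)."""

    def __init__(self, p0, v0=0.0, dt=1.0, q=1e-2, r=1.0):
        self.x = [p0, v0]                   # state estimate
        self.P = [[1.0, 0.0], [0.0, 1.0]]   # state covariance
        self.dt, self.q, self.r = dt, q, r

    def predict(self):
        dt, (p, v), P = self.dt, self.x, self.P
        # x = F x with F = [[1, dt], [0, 1]]
        self.x = [p + dt * v, v]
        # P = F P F^T + Q, with Q = q * I (a simplifying assumption)
        p00 = P[0][0] + dt * (P[1][0] + P[0][1]) + dt * dt * P[1][1] + self.q
        p01 = P[0][1] + dt * P[1][1]
        p10 = P[1][0] + dt * P[1][1]
        p11 = P[1][1] + self.q
        self.P = [[p00, p01], [p10, p11]]
        return self.x[0]

    def update(self, z):
        """Correct the prediction with a position measurement z (H = [1, 0])."""
        P, r = self.P, self.r
        s = P[0][0] + r                       # innovation covariance H P H^T + R
        k0, k1 = P[0][0] / s, P[1][0] / s     # Kalman gain K = P H^T / s
        innovation = z - self.x[0]
        self.x = [self.x[0] + k0 * innovation, self.x[1] + k1 * innovation]
        # P = (I - K H) P
        self.P = [
            [(1 - k0) * P[0][0], (1 - k0) * P[0][1]],
            [P[1][0] - k1 * P[0][0], P[1][1] - k1 * P[0][1]],
        ]
        return self.x[0]
```

Calling `predict()` once per frame and `update(z)` whenever a detection is available lets the filter bridge missed detections with the motion model, which is how temporal information is exploited.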

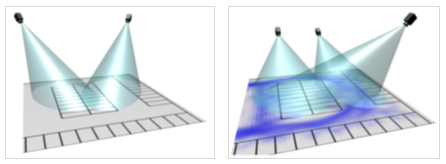

Active Vision / Camera network reconfiguration

Different approaches are investigated to address object detection and tracking with a network of moving PTZ cameras. Specific studies focus on selecting robust features for estimating displacements caused by real object motion and/or camera motion. Different registration techniques are studied to align consecutive frames and determine the actual motion that occurred.
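As a minimal illustration of registering consecutive frames, the sketch below assumes the camera motion between two frames is a pure integer translation (for example, a small pan) and finds it by exhaustive search, minimizing the mean absolute difference over the overlapping region. This is an assumed baseline; PTZ registration in practice typically relies on robust features and richer motion models such as homographies. The function name and `max_shift` are hypothetical.

```python
def register_translation(prev, curr, max_shift=3):
    """Return the (dy, dx) shift such that curr[y + dy][x + dx] best matches prev[y][x].

    Every integer shift in [-max_shift, max_shift]^2 is scored by the mean absolute
    difference over the overlapping region; the lowest cost wins."""
    h, w = len(prev), len(prev[0])
    best, best_cost = (0, 0), float("inf")
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            total, count = 0, 0
            # restrict to pixels whose shifted position stays inside curr
            for y in range(max(0, -dy), min(h, h - dy)):
                for x in range(max(0, -dx), min(w, w - dx)):
                    total += abs(prev[y][x] - curr[y + dy][x + dx])
                    count += 1
            cost = total / count
            if cost < best_cost:
                best, best_cost = (dy, dx), cost
    return best
```

Once the global (camera-induced) shift is compensated, the residual differences between the aligned frames can be attributed to real object motion.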

Active vision should mean more than just using moving sensors. The AVIRES Lab has therefore extended this concept to include techniques that actively control the quality of image acquisition with respect to an object of interest and for image processing purposes. Techniques for the automatic tuning of intrinsic camera parameters (focus, aperture, and zoom) are also investigated, with the objective of acquiring high-quality images in outdoor scenes where illumination conditions can change suddenly.
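Automatic focus tuning can be illustrated with contrast-based autofocus, a common technique assumed here because the Lab's own method is not described: sweep the focus positions, score each captured image with a sharpness measure, and keep the best one. The gradient-energy measure and the `capture(position)` callback are hypothetical choices for this sketch.

```python
def sharpness(image):
    """Gradient-energy focus measure: sum of squared horizontal and vertical differences.

    Better-focused images have stronger local intensity changes, hence higher energy."""
    h, w = len(image), len(image[0])
    energy = 0
    for y in range(h - 1):
        for x in range(w - 1):
            gx = image[y][x + 1] - image[y][x]
            gy = image[y + 1][x] - image[y][x]
            energy += gx * gx + gy * gy
    return energy


def autofocus(capture, focus_positions):
    """Return the focus position whose captured image is sharpest.

    `capture(position)` is a hypothetical camera callback returning a grayscale image."""
    return max(focus_positions, key=lambda pos: sharpness(capture(pos)))
```

A full sweep is the simplest strategy; when illumination changes quickly, a coarse-to-fine or hill-climbing search over the same measure reduces the number of captures needed.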

Extracting valuable information from the images acquired by sensors is a challenging task, and standard approaches have traditionally relied on hand-coded methods. To tackle these issues, the Lab is proposing novel machine learning algorithms in which high-level information is learned from data.

Particular attention is given to deep learning, a sub-field of machine learning based on learning multiple levels of representation, corresponding to a hierarchy of features, factors, or concepts, in which higher-level concepts are defined from lower-level ones and the same lower-level concepts can help define many higher-level concepts.

Data Fusion

Data fusion is the process of combining information from multiple sources and sensors, which can be of the same type (homogeneous) or have different characteristics (heterogeneous). Fusion improves the estimation of the observed physical quantities and of the environment in which they are acquired: the fused data are more accurate than the estimate of a single sensor, so errors are reduced. The rationale is that one source can compensate for the errors of another, offering advantages such as increased accuracy and resilience to failures. Systems that fuse data from different sources are therefore expected to benefit from the heterogeneity and diversity of the information involved.
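One standard way to make the accuracy claim above concrete, assuming independent, unbiased sensors with known noise variances, is inverse-variance weighting: each estimate is weighted by the inverse of its variance, and the fused variance 1 / Σ(1/σᵢ²) is never larger than the smallest input variance. The function name is hypothetical; this is a textbook fusion rule, not necessarily the one used in the Lab's systems.

```python
def fuse(measurements):
    """Fuse independent, unbiased estimates of the same quantity.

    `measurements` is a list of (value, variance) pairs. Returns (fused_value, fused_variance),
    where each estimate is weighted by 1 / variance."""
    weights = [1.0 / var for _, var in measurements]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, measurements)) / total
    return value, 1.0 / total
```

For example, two sensors reporting 10.0 and 12.0, each with variance 4.0, fuse to 11.0 with variance 2.0: halving the variance is the error reduction that motivates combining sources.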

Multimedia data can be either sensory (such as audio, video, RFID) or non-sensory (such as WWW resources, databases). Applications span an increasing number of sectors. To mention just a few examples, multi-sensor fusion benefits smart environments (audio, video, presence detectors), security (extension of the observed space, control of sensitive areas), aviation (flight safety), the automotive field (pedestrian detection, road transportation, pre-crash systems), and process control. Fusing different media can provide additional information and increase the accuracy of the overall decision process.

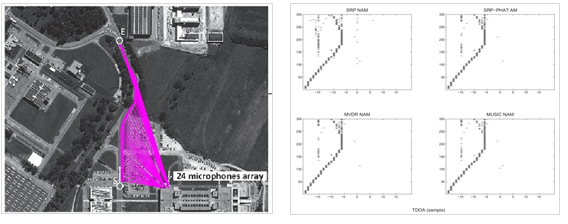

Audio signal processing and recognition

Acoustic scene analysis plays an important role in the design and development of signal-based surveillance applications and multimodal interactive environments. The localization of single and multiple acoustic sources using microphone arrays has been extensively investigated for both near-field and far-field analysis, and a number of new techniques and algorithms have been proposed for accurate Direction of Arrival (DOA) estimation, fusion of information from distributed sensors, and tracking of moving acoustic sources. These techniques are being applied to vehicle tracking for traffic surveillance, indoor acoustic event detection and localization, control of acoustic sources for musical applications, and event detection and classification for sensor steering and dynamic reconfiguration in intelligent environments.
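A classic baseline for two-microphone localization, assumed here rather than taken from the Lab's algorithms, estimates the time delay between the microphones by cross-correlation and converts it into an arrival angle with the far-field model, delay = d · sin(θ) / c. Practical systems often prefer generalized cross-correlation (e.g., GCC-PHAT) for robustness to reverberation. Function names, the microphone spacing, and the speed-of-sound default are illustrative.

```python
import math


def estimate_delay(x, y, max_lag):
    """Return the lag (in samples) maximizing the cross-correlation sum_n x[n] * y[n + lag].

    A positive lag means the signal reaches microphone y later than microphone x."""
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = sum(x[n] * y[n + lag]
                    for n in range(max(0, -lag), min(len(x), len(y) - lag)))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def direction_of_arrival(lag, sample_rate, mic_distance, speed_of_sound=343.0):
    """Convert a delay in samples into a far-field arrival angle (degrees from broadside)."""
    tau = lag / sample_rate
    s = speed_of_sound * tau / mic_distance
    s = max(-1.0, min(1.0, s))  # clamp: noisy delays can slightly exceed the physical limit
    return math.degrees(math.asin(s))
```

`max_lag` should cover the largest physically possible delay, mic_distance / speed_of_sound × sample_rate; searching beyond it only adds spurious peaks.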

The AVIRES Lab's research also covers speech signal processing for surveillance and security applications and for speaker characterization. Investigations have been conducted into the development of robust multimodal speaker verification systems that analyze both voice and facial cues for access to secured areas. Recently, research in this field has also addressed physiological modeling of voice and speech for phonation and speaker characterization, with applications to voice analysis, voice quality assessment, and speaker recognition.
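Voice and facial cues can be combined at several levels. A minimal sketch of score-level fusion, a widely used scheme assumed here for illustration, normalizes each matcher's score to [0, 1] and accepts the identity claim when a weighted sum exceeds a threshold. The score ranges, weight, and threshold are hypothetical and would normally be tuned on development data.

```python
def min_max_normalize(score, lo, hi):
    """Map a raw matcher score to [0, 1] given the score range observed on development data."""
    return min(1.0, max(0.0, (score - lo) / (hi - lo)))


def verify(voice_score, face_score, voice_range, face_range, w_voice=0.5, threshold=0.6):
    """Accept the claim if the weighted sum of normalized voice and face scores reaches the threshold.

    Returns (accepted, fused_score). Weight and threshold are illustrative values."""
    v = min_max_normalize(voice_score, *voice_range)
    f = min_max_normalize(face_score, *face_range)
    fused = w_voice * v + (1.0 - w_voice) * f
    return fused >= threshold, fused
```

Normalization matters because the two matchers produce scores on different scales; without it, one modality would dominate the sum regardless of its reliability.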

Augmented reality

Augmented reality (AR), understood as the enrichment of human perception through video, audio, and other sensory enhancements generated by computers in real time, is one of the fastest-growing areas in industry. Google’s Project Glass is one of the most recent state-of-the-art examples: it combines different sensors (e.g., audio, video, GPS) installed on a pair of glasses to deliver augmented reality applications.

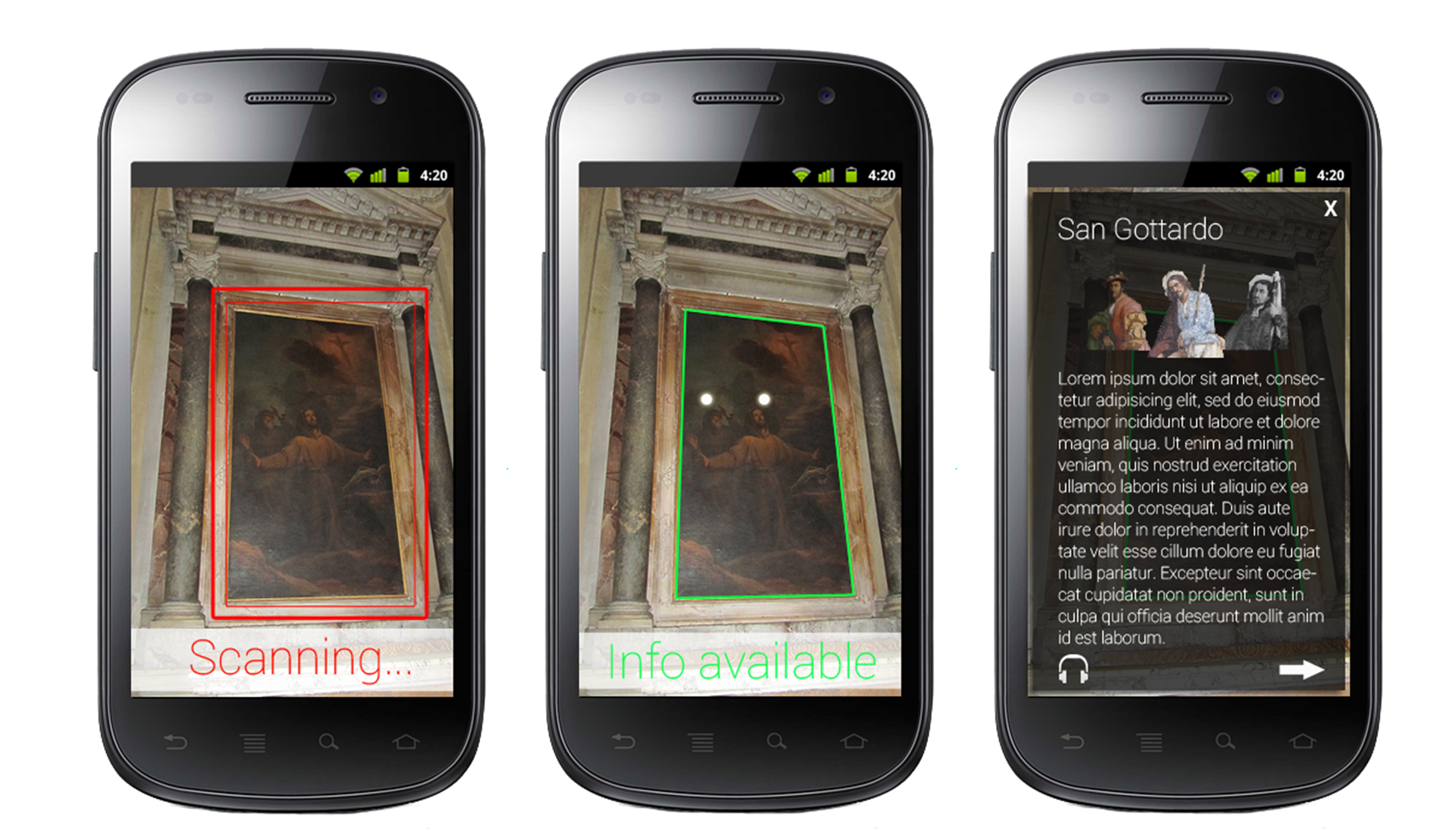

At the same time, the rapid development of increasingly sophisticated mobile devices has driven the creation of applications that combine augmented reality with the devices' integrated sensors to provide users with accurate real-time information. Given the importance and advantages of augmented reality, the AVIRES Lab is leading several research projects in this area. For example, museums are among the most important environments considered in this research: AR applications for Android smartphones have been implemented to support users during museum visits. Paintings are captured by the device camera, and information is provided using augmented reality techniques.

Moreover, augmented reality experiments have been performed to support users moving in outdoor environments by providing information on nearby points of interest (POIs). GPS and accelerometer sensors are used to calculate the user's position, and information is provided in real time simply by pointing the device camera at a POI (e.g., a monument) and capturing it. Other experiments use mobile camera sensors to recognize suspicious persons with well-known state-of-the-art computer vision techniques; in this case, augmented reality supports security guards by providing real-time information. Finally, augmented reality experiments also involve HCI research, exploring and evaluating new visualization techniques.
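Deciding which POI the user is pointing at can be sketched geometrically: compute the great-circle initial bearing from the user's GPS position to each POI and keep those within the camera's horizontal field of view around the device heading. This is an assumed approach; the compass heading is presumed to come from the device's orientation sensors, and the 60° field of view, function names, and POI format are hypothetical.

```python
import math


def initial_bearing(lat1, lon1, lat2, lon2):
    """Great-circle initial bearing from point 1 to point 2, in degrees clockwise from north."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    x = math.sin(dlon) * math.cos(phi2)
    y = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlon)
    return (math.degrees(math.atan2(x, y)) + 360.0) % 360.0


def visible_pois(user_lat, user_lon, heading, pois, fov=60.0):
    """Return names of POIs whose bearing lies within the camera's horizontal field of view.

    `heading` is the camera direction in degrees from north (assumed to come from the
    device's orientation sensors); `pois` maps names to (lat, lon) pairs."""
    visible = []
    for name, (lat, lon) in pois.items():
        bearing = initial_bearing(user_lat, user_lon, lat, lon)
        # signed angular difference wrapped to [-180, 180)
        diff = (bearing - heading + 180.0) % 360.0 - 180.0
        if abs(diff) <= fov / 2:
            visible.append(name)
    return visible
```

The wrap-around step is what lets a camera heading of 350° still match a POI lying at a bearing of 0°.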

Social Web

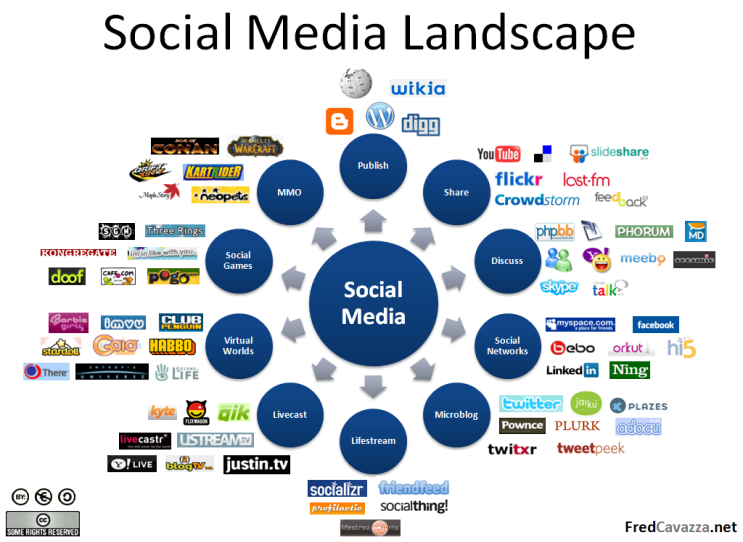

Society has been permeated by new digital technologies with potentially profound consequences, and it is now clear that the web is not a separate sphere but part of the same social reality about which scholars have produced several centuries' worth of insight. People engage through and with digital media, and in recent years popular commercial social networking sites such as Facebook, Twitter and Google+ are presumed to play a crucial role in social change through interaction and connectivity. Digital media have not only reconfigured the entire media landscape and transformed older forms of mass media, but have also become integrated into the very fabric of social life in a variety of social, political and cultural domains.

Online social platforms have allowed digital media users to be more than consumers of information, enabling them to generate their own online content: creating personal websites, posting comments on others’ blogs, and uploading photos and videos about specific topics. For these reasons, it is pivotal to analyze users' involvement in this emerging digital culture and to address the ways in which digital media are being developed and used, and how they are transforming different spheres of social life. Furthermore, it is also important to design and develop specific tools, software, and digital methods to organize and analyze the vast amount of online data (posts, photos, tags, links, threads, APIs, etc.) and user-generated content (UGC).